Hardware

Starting in December, the environment will contain a FreeBSD-based router/firewall and a single enterprise-class server:

- sitka -- a Riverbed Steelhead CX-770

- tk2018 -- an HP ProLiant DL380 (G7)

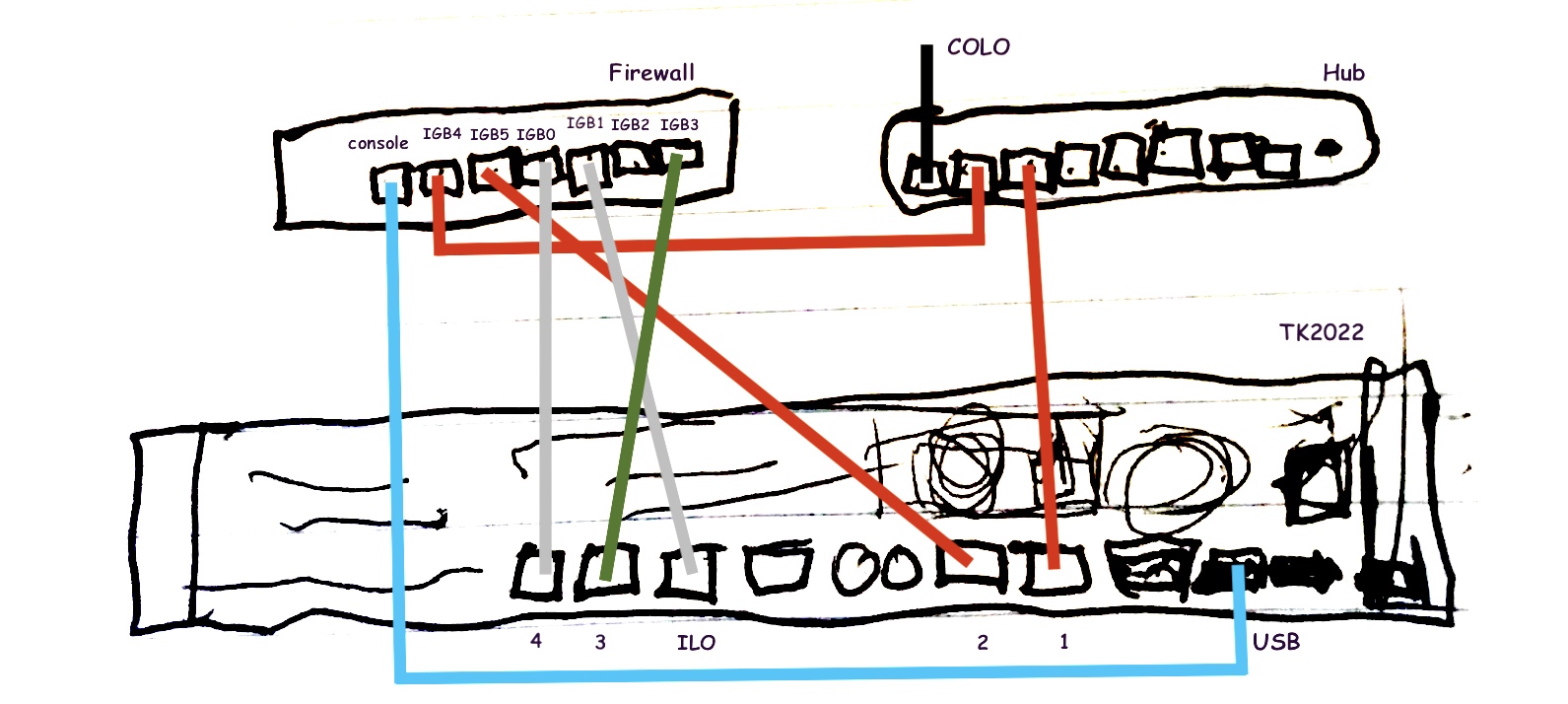

Network

The network is divided into 3 segments:

- 192.168.31.0/24 a private administrative lan

- 10.0.0.0/24 a WireGuard lan

- 198.202.31.129/25 a public-facing lan

The host itself does not have any public-facing interfaces. It is only accessible through the WireGuard-protected admin lan. The containers, which handle all public-facing work, do so via an anonymous bridge configuration that lets them reach the internet directly without allowing external access to the underlying servers.

As we move forward, the unfiltered interface used by the public-facing containers will be replaced by a filtered interface through the firewall.
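When checking which of the three segments above an address belongs to, a small helper can do the mask arithmetic. The following is a minimal sketch in POSIX shell; the function names `ip_to_int` and `in_cidr` are illustrative and are not part of the existing tooling.

```shell
#!/bin/sh
# Hypothetical helpers: test whether an IPv4 address falls inside a CIDR block.

# Convert a dotted quad (e.g. 192.168.31.159) to a single integer.
ip_to_int() {
    old_ifs=$IFS; IFS=.
    set -- $1                 # split the address into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# in_cidr ADDRESS NETWORK/PREFIX
# Succeeds when ADDRESS is inside the block. Both sides are masked, so a
# host address such as 198.202.31.129/25 is accepted as the block.
in_cidr() {
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

For example, `in_cidr 192.168.31.159 192.168.31.0/24 && echo admin` prints `admin`, while an address outside the block makes the function return non-zero.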

Sitka's Network Config

| sitka ports | | | |
|---|---|---|---|
| port | Interface | IP Address/mask | purpose |
| igb0 | bridge0 | 192.168.31.159/24 | internal / admin lan |
| igb1 | bridge0 | | |
| igb2 | N/A | N/A | N/A |
| igb3 | igb3 | ?.?.?.?/?? | TBD |
| igb4 | igb4 | 198.202.31.132/25 | |
| igb5 | igb5 | 0.0.0.0/32 | firewalled public interface |

TK2022's Network Config

| tk2022 ports | | | | |
|---|---|---|---|---|
| port | Interface | IP Address/mask | linux device | purpose |
| 1 | br0 | 0.0.0.0/32 | enp3s0f0 | unfiltered public interface |
| 2 | br2 | 0.0.0.0/32 | enp3s0f1 | firewalled public interface |
| 3 | N/A | ?.?.?.?/?? | enp4s0f0 | TBD |
| 1 | br1 | 192.168.31.159/24 | enp4s0f1 | internal / admin lan |
| ilo | | 192.168.31.119/24 | | remote console |

As Drawn

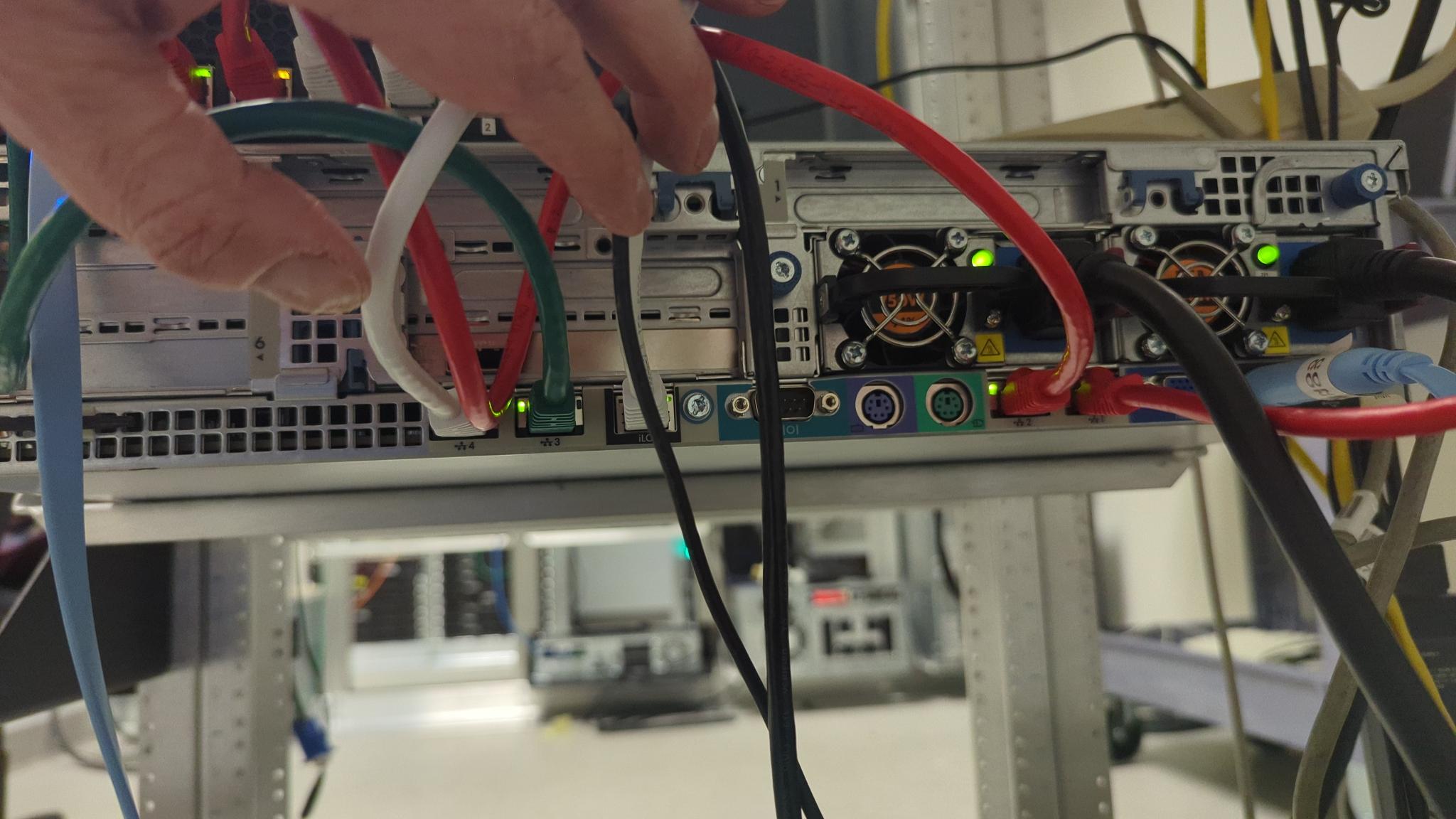

As Deployed

As implemented

in /etc/network/interfaces

#--------------------------------------------------------/etc/network/interfaces

# 2: enp3s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br0 state UP group default qlen 1000

# 3: enp3s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq master br1 state UP group default qlen 1000

# 4: enp4s0f0: <BROADCAST,MULTICAST> mtu 1500 qdisc noop state DOWN group default qlen 1000

# 5: enp4s0f1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc mq master br3 state UP group default qlen 1000

# https://ip4calculator.com

source /etc/network/interfaces.d/*

auto lo

iface lo inet loopback

iface enp3s0f0 inet manual

iface enp3s0f1 inet manual

iface enp4s0f0 inet manual

iface enp4s0f1 inet manual

auto br0

iface br0 inet manual

bridge_ports enp3s0f0

bridge_stp off # disable Spanning Tree Protocol

bridge_waitport 0 # no delay before a port becomes available

bridge_fd 0 # no forwarding delay

auto br1

iface br1 inet static

address 192.168.31.159

network 192.168.31.0

netmask 255.255.255.0

broadcast 192.168.31.255

bridge_ports enp4s0f1

bridge_stp off # disable Spanning Tree Protocol

bridge_waitport 0 # no delay before a port becomes available

bridge_fd 0 # no forwarding delay

EOD
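To compare the running state with the file above, note that the `master` field in `ip -o link show` output names the bridge a port is attached to (the commented lines at the top of the file are in that format). The following is a minimal sketch; the function name `bridge_members` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `ip -o link show` style lines on stdin and
# print one "interface bridge" pair for each port attached to a bridge.
bridge_members() {
    awk '{
        name = $2
        sub(/:$/, "", name)                 # "enp3s0f0:" -> "enp3s0f0"
        for (i = 3; i < NF; i++)
            if ($i == "master") print name, $(i + 1)
    }'
}
```

On the host this would be run as `ip -o link show | bridge_members`; ports that are down or not attached to any bridge produce no output.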

and in /etc/rc.conf

ifconfig_igb4="69.41.138.126 netmask 255.255.255.224"

defaultrouter="69.41.138.97"

#ifconfig_igb0="inet 192.168.31.2 netmask 255.255.255.0"

cloned_interfaces="bridge0"

ifconfig_bridge0="inet 192.168.31.2 netmask 255.255.255.0 addm igb0 addm igb1 up"

ifconfig_igb0="up"

ifconfig_igb1="up"
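The rc.conf entries above use dotted netmasks, while the port tables use prefix lengths. The following is a minimal sketch for converting one to the other when comparing the two; the function name `mask_to_prefix` is hypothetical, and it does not check that the mask bits are contiguous.

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted netmask to a CIDR prefix length.
# Assumes a well-formed, contiguous netmask; contiguity is not verified.
mask_to_prefix() {
    old_ifs=$IFS; IFS=.
    set -- $1                 # split the mask into four octets
    IFS=$old_ifs
    bits=0
    for octet in "$@"; do
        case $octet in
            255) bits=$((bits + 8)) ;;
            254) bits=$((bits + 7)) ;;
            252) bits=$((bits + 6)) ;;
            248) bits=$((bits + 5)) ;;
            240) bits=$((bits + 4)) ;;
            224) bits=$((bits + 3)) ;;
            192) bits=$((bits + 2)) ;;
            128) bits=$((bits + 1)) ;;
            0)   ;;
            *)   echo "invalid netmask octet: $octet" >&2; return 1 ;;
        esac
    done
    echo "$bits"
}
```

With the values above, `mask_to_prefix 255.255.255.224` gives 27 and `mask_to_prefix 255.255.255.0` gives 24.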

As referenced

See: https://bitbucket.org/suspectdevicesadmin/ansible/src/master/hosts which is built from a Google doc of proposed allocations.

Server OS, Filesystems and Disk layout

The server runs Debian bookworm along with the Zabbly-supported version of Incus. Outside of zfs, not much is added to the stock installation. This is intentional. The real work is done by the containers; the host OS is considered disposable.

Disk Layout

The incus server uses hardware raid 1 for the boot disk. The containers and other data are able to take advantage of zfs mirroring and caching.

| kb2018 disks | | | | | |
|---|---|---|---|---|---|
| disk | device/pool | bay | type | mount point(s) | purpose/notes |
| sdb | /dev/sdb | 2C:1:3 | raid1+0 | /, /var/lib/incus | os and incus data |
| | | 2C:1:4 | raid1+0 | | |
| sda | infra, devel | 3C:1:7 | zfs | incus storage pools | |
| sdg | | 3C:1:8 | zfs mirror | | |
| sdd | tank | 3C:1:5 | zfs | /tank | space for stuff |
| sdc | | 3C:1:6 | zfs mirror | | |

Hardware raid on the DL380

The raid controller on the Dell allows mixing hardware raid and direct hot-swappable connections. The HP 420i does either hardware raid or direct connections (HBA), but not both. Since we use the hardware raid, the remaining disks need to be configured using ssacli or the raid controller's BIOS.

Containers

Work previously done by standalone servers is now done through incus-managed containers. A mostly up-to-date list of containers is maintained at https://bitbucket.org/suspectdevicesadmin/ansible/src/master/hosts

Ansible

Ansible is used to make most tasks reasonable, including:

- creating containers

- updating admin passwords and ssh keys

Tasks: Accessing Hosts

tk2022 ssh access

The host machines for the containers can be accessed through the admin lan. This is done via WireGuard on either sitka or virgil.

Note: as of a few updates ago, you have to tell Apple's ssh client to accept ssh-dss host keys, as below.

YOU ARE HERE update the ilo settings so they report the right server.

steve:~ don$ ssh -p22 -oHostKeyAlgorithms=+ssh-dss feurig@tinas-ilo.suspectdevices.com

feurig@192.168.31.119's password:

User:feurig logged-in to kb2018.suspectdevices.com(192.168.31.119 / FE80::9E8E:99FF:FE0C:BAD8)

iLO 3 Advanced for BladeSystem 1.88 at Jul 13 2016

Server Name: kb2018

Server Power: On

</>hpiLO-> vsp

Virtual Serial Port Active: COM2

Starting virtual serial port.

Press 'ESC (' to return to the CLI Session.

tk2022 login: <ESC> (

</>hpiLO-> exit

_If the serial port is still in use, do the following:_

Virtual Serial Port is currently in use by another session.

</>hpiLO-> stop /system1/oemhp_vsp1

See: ilo 3 notes page

ssh access to containers

The susdev profile adds ssh keys and sudo passwords for admin users allowing direct ssh access to the container.

steve:~ don$ ssh feurig@ian.suspectdevices.com

...

feurig@ian:~$

The containers can be accessed directly from the incus host as root

root@bs2020:~# incus exec harvey bash

root@harvey:~# apt-get update&&apt-get -y dist-upgrade&& apt-get -y autoremove

Updating dns

DNS is provided by bind. The zone files have been consolidated into a single directory under /etc/bind/zones on naomi (dns.suspectdevices.com).

root@naomi:/etc/bind/zones# nano suspectdevices.hosts

...

@ IN SOA dns1.digithink.com. don.digithink.com. (

2018080300 10800 3600 3600000 86400 )

; ^^ update ^^

; .... make some changes ....

morgan IN A 198.202.31.224

git IN CNAME morgan

...

root@naomi:/etc/bind/zones# service bind9 reload

root@naomi:/etc/bind/zones# tail /var/log/messages

...

Sep 3 08:10:04 naomi named[178]: zone suspectdevices.com/IN: loaded serial 2018080300

Sep 3 08:10:04 naomi named[178]: zone suspectdevices.com/IN: sending notifies (serial 2018080300)

Sep 3 08:10:04 naomi named[178]: client 198.202.31.132#56120 (suspectdevices.com): transfer of 'suspectdevices.com/IN': AXFR-style IXFR started (serial 2018080300)

Sep 3 08:10:04 naomi named[178]: client 198.202.31.132#56120 (suspectdevices.com): transfer of 'suspectdevices.com/IN': AXFR-style IXFR ended

Sep 3 08:10:04 naomi named[178]: client 198.202.31.132#47381: received notify for zone 'suspectdevices.com'
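The serial in the SOA record above follows the YYYYMMDDNN pattern and has to increase on every change, or the secondaries will not pick up the zone. The following is a minimal sketch for working out the next value; the function name `next_serial` is hypothetical and the script does not edit the zone file.

```shell
#!/bin/sh
# Hypothetical helper: compute the next YYYYMMDDNN zone serial.
# usage: next_serial CURRENT_SERIAL [YYYYMMDD]   (date defaults to today)
next_serial() {
    current=$1
    today=${2:-$(date +%Y%m%d)}
    candidate="${today}00"
    if [ "$candidate" -gt "$current" ]; then
        # First change of the day: start at revision 00.
        echo "$candidate"
    else
        # Already changed today (or serial is ahead of the date): add one.
        echo $(( current + 1 ))
    fi
}
```

For example, `next_serial 2018080300 20180903` gives 2018090300, and a second change on the same day gives 2018090301. Running `named-checkzone suspectdevices.com suspectdevices.hosts` before the reload is a cheap way to catch syntax errors.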

Updating Hosts / Containers

When updates are available, Apticron sends us an email. We prefer this to auto-updating our hosts, as it helps us maintain awareness of what issues are being addressed and does not stop working when there are issues. All running containers can be updated using the following update script.

nano /usr/local/bin/update.sh

#!/bin/bash

# update.sh for debian/ubuntu/centos/suse (copyleft) don@suspectdevices.com

echo --------------------- begin updating `uname -n` ----------------------

if [ -x "$(command -v apt-get)" ]; then

apt-get update

apt-get -y dist-upgrade

apt-get -y autoremove

fi

if [ -x "$(command -v yum)" ]; then

echo yum upgrade.

yum -y upgrade

fi

if [ -x "$(command -v zypper)" ]; then

echo zypper dist-upgrade.

zypper --non-interactive dist-upgrade

fi

echo ========================== done ==============================

^X

chmod +x /usr/local/bin/update.sh

incus file push /usr/local/bin/update.sh virgil/usr/local/bin/

incus exec virgil chmod +x /usr/local/bin/update.sh

for c in `incus list -cn -f compact|grep -v NAME`; do incus exec $c update.sh; done ; update.sh

This could also be used as an ansible ad hoc command.

ansible pets -m raw -a "update.sh"

https://bitbucket.org/suspectdevicesadmin/ansible/src/master/files/update.sh
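The one-line loop above keeps going when a container fails but does not say which one failed. The following is a minimal sketch of a variant that collects the failures; the function names `for_each_container` and `update_one` are hypothetical, and it assumes update.sh is already installed in each container.

```shell
#!/bin/sh
# Hypothetical helper: run "COMMAND NAME" for every container name read
# from stdin. Keeps going on failure and returns non-zero if any run failed.
for_each_container() {
    failed=""
    while read -r name; do
        [ -n "$name" ] || continue
        # </dev/null stops the command from consuming the list of names.
        if ! "$@" "$name" </dev/null; then
            failed="$failed $name"
        fi
    done
    if [ -n "$failed" ]; then
        echo "update failed on:$failed" >&2
        return 1
    fi
}

# Assumes update.sh is on the PATH inside the container.
update_one() {
    incus exec "$1" -- update.sh
}
```

On the incus host this could be driven with `incus list -c n -f csv | for_each_container update_one`, followed by `update.sh` for the host itself.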

Creating containers

cd /etc/ansible

nano hosts

... add new host ...

ansible-playbook playbooks/create-lxd-containers.yml

Backing Up Containers

YOU ARE HERE RETHINKING THIS